Making ‘black-box’ machine learning easily understandable and usable by domain experts so that they can make high-quality decisions with confidence.

Background

Machine learning (ML) currently faces persistent challenges with user acceptance of delivered solutions, and deployed systems are often misused, disused, or even fail. Significant barriers to the adoption of ML approaches exist in the areas of user trust (in ML results), comprehension (of ML processes) and the associated workload, and confidence (in decisions based on ML results). These fundamental challenges can be attributed to the ‘black-box’ nature of ML-based solutions from the perspective of domain users.

Therefore, beyond the development of ML algorithms, research on bringing humans into the ML loop (human-in-the-loop ML) and on making ML transparent has recently emerged as an active research field. Other terms are also used for this research, e.g. human interpretability in machine learning or explainable artificial intelligence (XAI). We use the term Transparent Machine Learning (TML). TML aims to translate ML into real impact by enabling domain users to understand data-driven inferences and make trustworthy decisions confidently based on ML results, and by making ML accessible to domain users without requiring training in complex ML algorithms and mathematical concepts. TML brings evolutionary improvements to the existing state of practice, for example:

- TML not only helps domain users proactively use ML outputs for informed and trustworthy decision making, but also allows them to see whether an artificial intelligence system is working as intended;

- TML can help identify the causes of an adverse decision and can therefore provide guidance on how to reverse it;

- TML enables effective interaction with ML algorithms, thus providing opportunities to improve the impact of ML algorithms in real-world applications.

Technologies

We propose the following approaches:

- 2D Transparency Space: a framework that integrates both ML experts and domain users as active participants in the overall workflow of ML-based solutions.

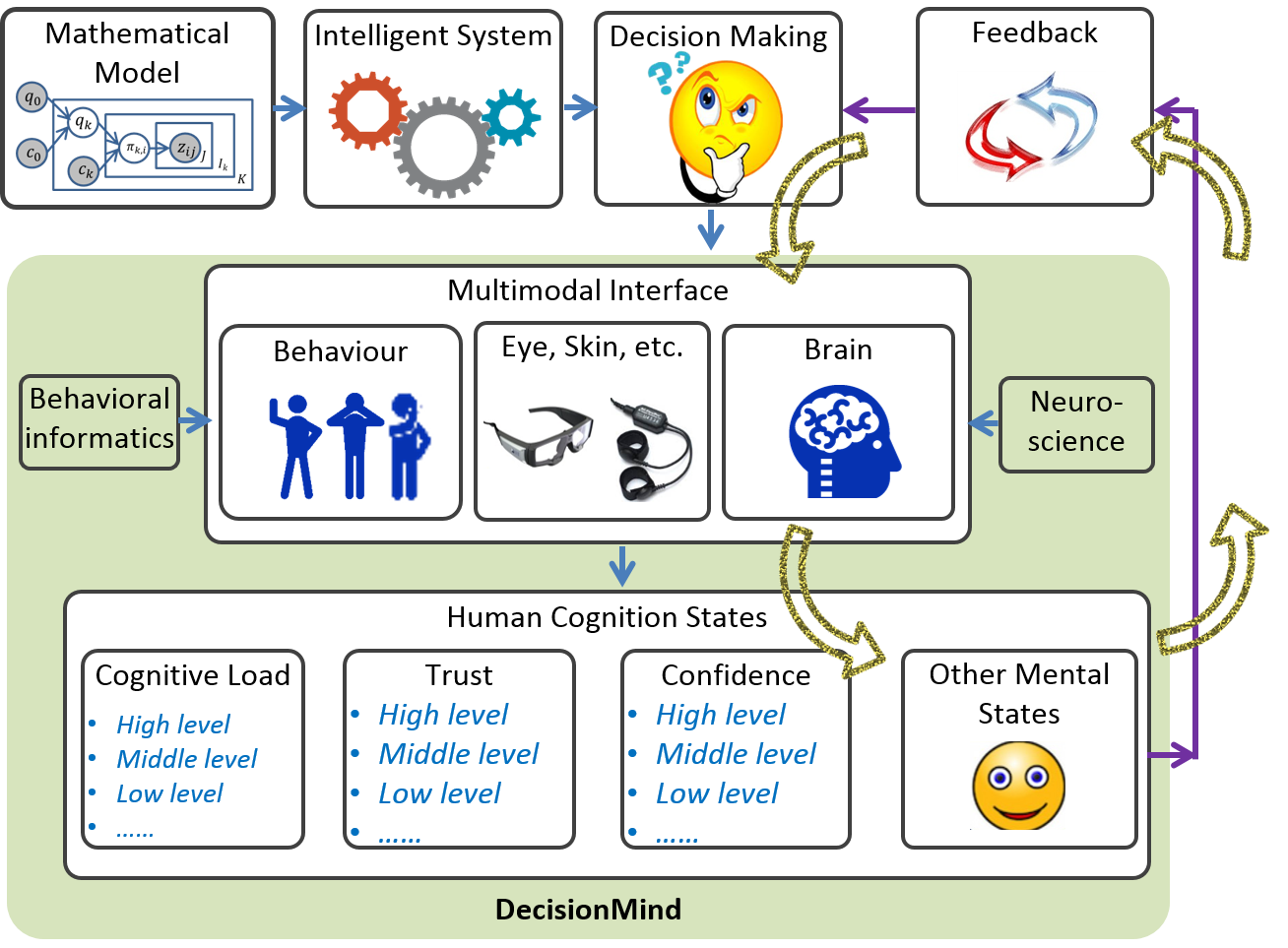

- DecisionMind: a pipeline that reveals human cognition states in ML-based decision making through multimodal interfaces.

Selected Publications

- Jianlong Zhou and Fang Chen. DecisionMind: Revealing Human Cognition States in Data Analytics-Driven Decision Making with a Multimodal Interface. Journal on Multimodal User Interfaces, Springer, 2017.

- Jianlong Zhou, Syed Z. Arshad, S. Luo, and Fang Chen. Effects of Uncertainty and Cognitive Load on User Trust in Predictive Decision Making. The 16th IFIP TC 13 International Conference on Human-Computer Interaction (INTERACT 2017), 2017. Reviewer’s Choice Award; “The Brian Shackel Award”, in recognition of the most outstanding contribution with international impact in the field of human interaction with, and human use of, computers and information technology.

- Jianlong Zhou, Syed Z. Arshad, Xiuying Wang, Zhidong Li, David Feng, and Fang Chen. End-User Development for Interactive Data Analytics: Uncertainty, Correlation and User Confidence. IEEE Transactions on Affective Computing, 2017. In press.

- Jianlong Zhou, Jinjun Sun, Yang Wang, and Fang Chen. Wrapping Practical Problems into a Machine Learning Framework: Using Water Pipe Failure Prediction as a Case Study. International Journal of Intelligent Systems Technologies and Applications, vol. 16, no. 3, pp. 191-207, 2017.

- Jianlong Zhou, Syed Z. Arshad, Kun Yu, and Fang Chen. Correlation for User Confidence in Predictive Decision Making. OzCHI 2016, 2016.

- Jianlong Zhou and Fang Chen. Making Machine Learning Useable. International Journal of Intelligent Systems Technologies and Applications, vol. 14, no. 2, pp. 91-109, 2015.

- Jianlong Zhou, Constant Bridon, Fang Chen, Ahmad Khawaji, and Yang Wang. Be Informed and Be Involved: Effects of Uncertainty and Correlation on User’s Confidence in Decision Making. Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems (CHI '15), pp. 923-928, 2015.

- Jianlong Zhou, Jinjun Sun, Fang Chen, Yang Wang, Ronnie Taib, Ahmad Khawaji, and Zhidong Li. Measurable Decision Making with GSR and Pupillary Analysis for Intelligent User Interface. ACM Transactions on Computer-Human Interaction (ToCHI), vol. 21, no. 6, article no. 33, 2015.